Medical robotics has been applied in many areas, including surgical assistance, which allows operations to be carried out with higher precision and less fatigue, and tele-operation, which enables a surgeon to operate remotely without being physically present with the patient. Medical imaging, which visualizes the anatomical or physiological state of a biological subject, has evolved rapidly alongside medical robotics over the past two decades, with both fields moving toward less invasive, more personalized, and lower-risk diagnostic and therapeutic approaches.

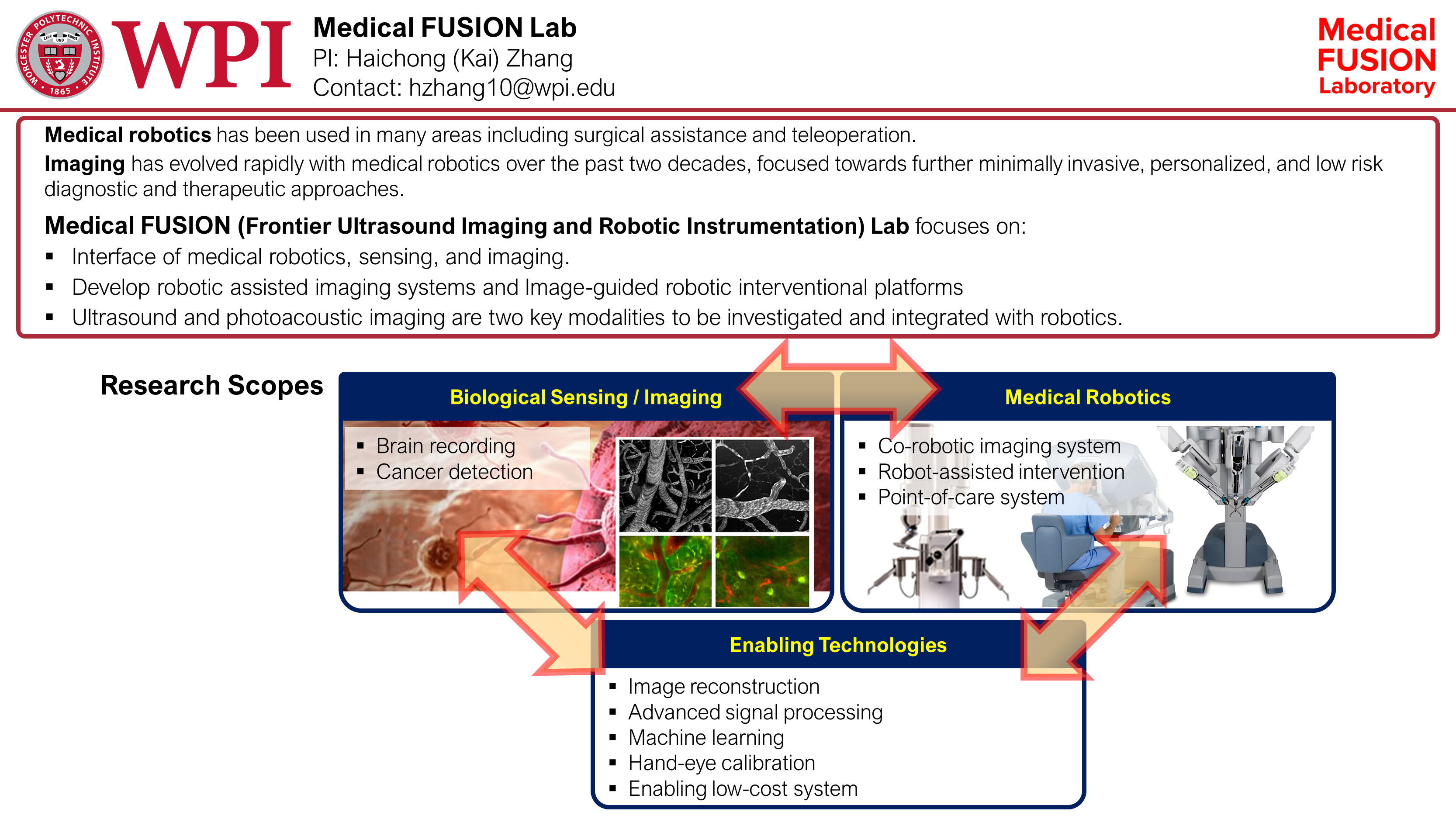

The Medical FUSION (Frontier Ultrasound Imaging and Robotic Instrumentation) Lab focuses on the interface of medical robotics, sensing, and imaging. It develops robot-assisted imaging systems as well as image-guided robotic interventional platforms, with ultrasound and photoacoustic (PA) imaging as the two key modalities to be investigated and integrated with robotics. Ultrasound delineates structural information and compares favorably with other imaging modalities because of its high degree of safety, ease of use, and cost-effectiveness. PA imaging is a hybrid modality that merges light and ultrasound and reveals tissue metabolism and molecular distribution using endogenous and exogenous contrast agents. Together, ultrasound and PA imaging can comprehensively depict both anatomical and functional information of biological tissues.

The scope of innovation focuses on medical robotics, sensing, and imaging.

- Autonomous Robotics for Medical Imaging: A robotic component is essential to reduce user dependency in ultrasound scanning, to build images with higher resolution and contrast, and to miniaturize and simplify the imaging platform.
- Functional Photoacoustic Imaging for Guiding Diagnosis and Therapy: The combination of sound and light provides additional functional information for surgical guidance with high sensitivity and specificity.
- Instrumentation for Image-Guided Procedures
- Human-Robot Interface Powered by AI and Ultrasound

The developed systems will synergistically improve image quality as well as surgical accuracy and specificity in diagnostic and interventional applications. To achieve this, I will form a highly interdisciplinary team of individuals experienced in computer science, mechanical engineering, acoustics, optics, and biology.